Security and Compliance

Security is a critical component in earning and maintaining the trust of our platform users.

Data Security

Data Encryption

Data encryption at rest and in transit depends on the storage service providers for SeaweedFS and PostgreSQL. In the Kubernetes deployment, the Qalita Helm Chart does not include encryption configuration by default.

It is the responsibility of the system administrator deploying the platform to configure encryption for data at rest and in transit. Support from a Database Administrator and a Network Engineer may be required.

At Rest

Storage systems read and write data to storage devices. These systems are referred to as "stateful" because their state is persisted. In most cases, they offer encryption methods for data "at rest", meaning encryption before writing data to the storage and decryption upon reading. This ensures that raw data cannot be exploited if the storage is compromised.

| PostgreSQL | SeaweedFS | Redis |
|---|---|---|
| For PostgreSQL, the database engine configuration must be updated in the Helm chart to enable encryption at rest. See: Helm PostgreSQL - Database Engine Configuration | SeaweedFS supports blob encryption using a unique key stored in metadata. Since metadata is stored in the Filer Store, and raw data in the Volume Store, a robust setup involves separating these. See: Filesystem Encryption Configuration | In QALITA Platform, Redis is used as a "stateless" cache without persistent storage, so no specific encryption configuration is needed. |
| To go further, you can explore PostgreSQL's native encryption features: PostgreSQL Encryption Options | | |

In Transit

Encryption in transit refers to the encryption of data transferred over the ports of storage systems:

- 5432 for PostgreSQL
- 8333 for SeaweedFS
- 6379 for Redis

These systems offer encryption layers over their respective ports.

| PostgreSQL | SeaweedFS | Redis |
|---|---|---|
| Helm PostgreSQL - Transit Encryption | Helm SeaweedFS - Transit Encryption | Helm Redis - Transit Encryption |
| | SeaweedFS Security Configuration | |
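Once encryption in transit is enabled on the services, clients should also refuse to fall back to plaintext. The following is a minimal sketch of how client URLs could be built to enforce this. The host names, user, and database name are hypothetical placeholders; `sslmode=verify-full` is a libpq connection parameter, and `rediss://` is the TLS URL scheme understood by common Redis clients such as redis-py. It assumes TLS has already been configured on each service.

```python
from urllib.parse import quote

# Ports come from the list above; hosts and credentials are placeholders.
PORTS = {"postgresql": 5432, "seaweedfs": 8333, "redis": 6379}


def tls_client_urls(hosts: dict, user: str, password: str, database: str) -> dict:
    """Build client URLs that require an encrypted connection."""
    usr = quote(user, safe="")
    pwd = quote(password, safe="")  # percent-encode characters such as '@' or '/'
    return {
        # verify-full: encrypt, verify the certificate chain and the host name.
        "postgresql": (
            f"postgresql://{usr}:{pwd}@{hosts['postgresql']}:{PORTS['postgresql']}"
            f"/{database}?sslmode=verify-full"
        ),
        # HTTPS endpoint on the SeaweedFS port listed above.
        "seaweedfs": f"https://{hosts['seaweedfs']}:{PORTS['seaweedfs']}",
        # rediss:// (double "s") asks the client to use TLS.
        "redis": f"rediss://:{pwd}@{hosts['redis']}:{PORTS['redis']}/0",
    }


# Hypothetical in-cluster host names, for illustration only.
example_urls = tls_client_urls(
    {"postgresql": "pg.internal", "seaweedfs": "s3.internal", "redis": "redis.internal"},
    user="qalita",
    password="p@ss/word",
    database="qalita",
)
```

A connection attempt with these URLs should fail outright if the server does not offer TLS, which makes a misconfiguration visible instead of silently sending data in clear text.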

For HTTP servers:

- 3080 for QALITA Backend
- 3000 for QALITA Frontend
- 80 for the QALITA Documentation Server

Each of these ports must be mapped to an Ingress domain that has its own TLS certificate.

HTTP Request Encryption

HTTP request encryption depends on the network endpoint configuration and the TLS certificates used.

It is the responsibility of the system administrator to configure the network endpoints and provide valid and exclusive TLS certificates to ensure secure communication.

In the Kubernetes deployment, the Qalita Helm Chart requires cert-manager to be properly configured to issue valid certificates for network endpoints.

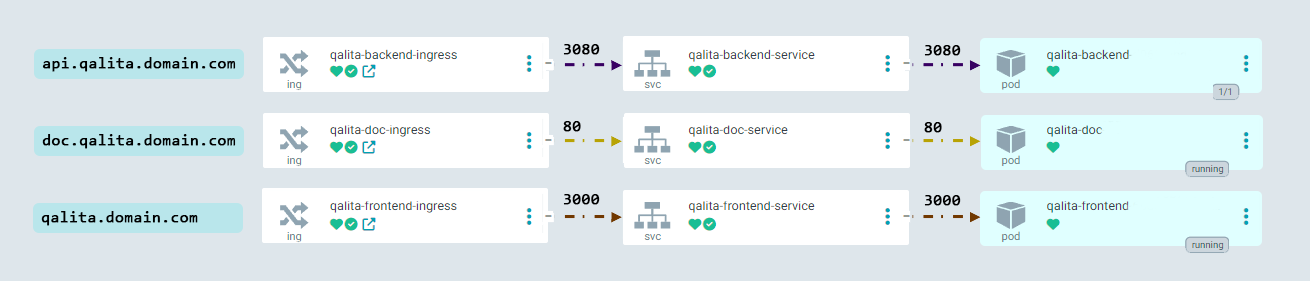

The following endpoints are created:

- frontend | domain.com ---> qalita-frontend-service:3000
- backend | api.domain.com ---> qalita-backend-service:3080
- doc | doc.domain.com ---> qalita-doc-service:80
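After deployment, it can be useful to confirm that each endpoint presents a valid certificate and to see how long it remains valid. The sketch below uses only the Python standard library. It assumes the endpoints are reachable on port 443 through the Ingress; `domain.com` is the placeholder used in the list above and should be replaced with the real domain.

```python
import socket
import ssl
import time
from typing import Optional

# Host names from the endpoint list above (placeholders).
INGRESS_HOSTS = ["domain.com", "api.domain.com", "doc.domain.com"]


def days_remaining(not_after: str, now: Optional[float] = None) -> float:
    """Days until a certificate's notAfter timestamp (negative if expired)."""
    expires = ssl.cert_time_to_seconds(not_after)
    current = time.time() if now is None else now
    return (expires - current) / 86400


def check_host(host: str, port: int = 443, timeout: float = 5.0) -> float:
    """Open a verified TLS connection and return the days left on its certificate.

    Raises an OSError subclass if the connection or certificate validation fails.
    """
    context = ssl.create_default_context()  # verifies the chain and the host name
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            cert = tls.getpeercert()
    return days_remaining(cert["notAfter"])
```

Calling `check_host(host)` for each entry in `INGRESS_HOSTS` either returns the remaining validity in days or raises an error, for example when the certificate does not match the host name or is not trusted.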

Metric Management

Metrics are computed by the packs and stored in the platform's relational database. They are used for generating analysis reports, dashboards, and tickets.

Some packs generate metrics that are directly derived from source data, without aggregation. These are stored in the platform’s relational database and should be accessed with the same security level as the original source data.

Be sure to read the pack documentation to understand the sensitivity of the generated metrics.

Data Compliance

Depending on your industry, you may be subject to data compliance standards and regulations.

The platform aims to align with the following standards and regulations, although no guarantee or certification is provided:

Activity Monitoring

Access Management

Platform access is managed through an authentication and authorization system.

To learn more, visit User Management / Roles / Permissions

Log Management

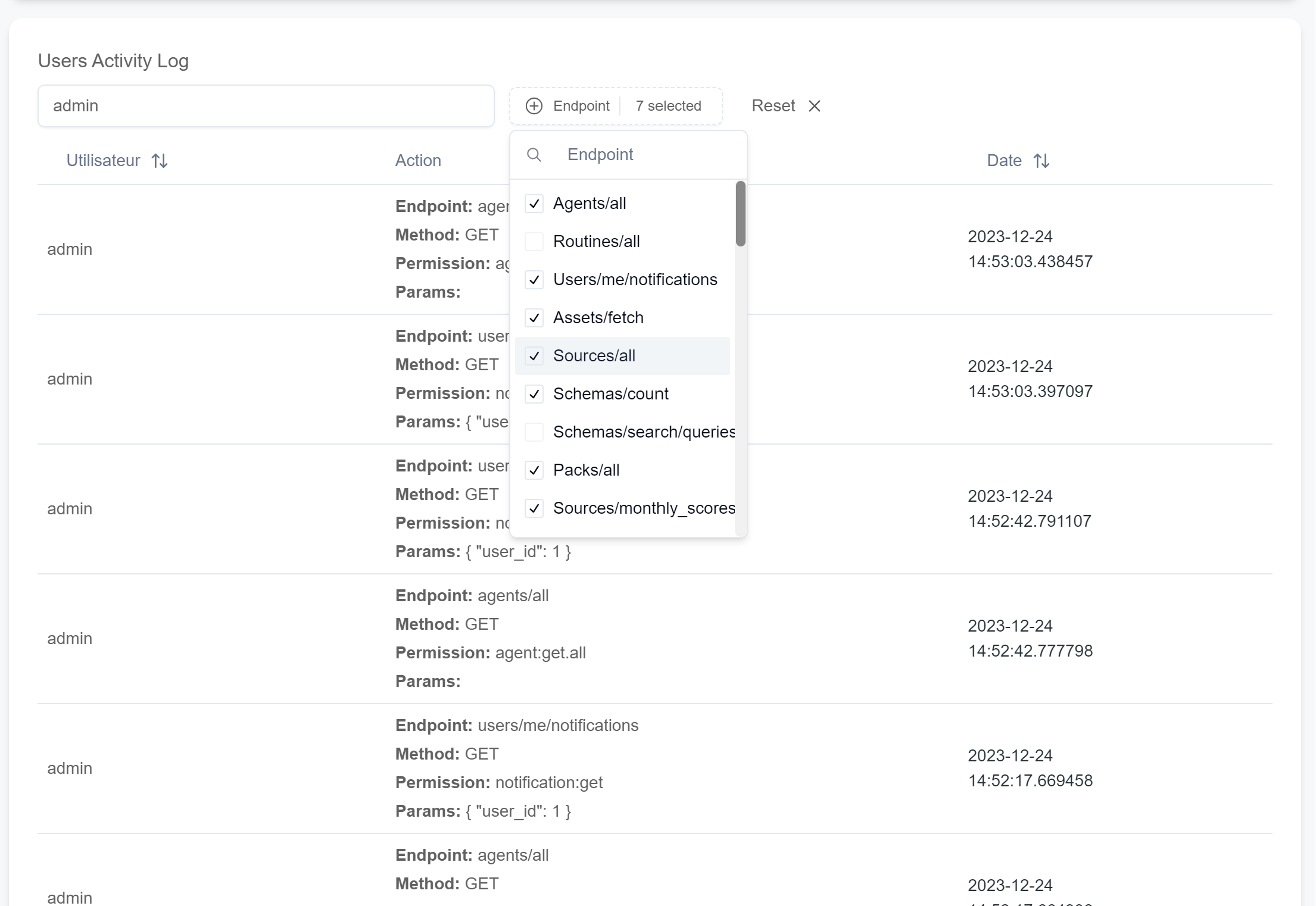

There are two types of logs:

- User Activity Logs: These logs are stored in the relational database (in the `logs` table). They track user actions on the web app and via agents, as the same authentication tokens are used for both.

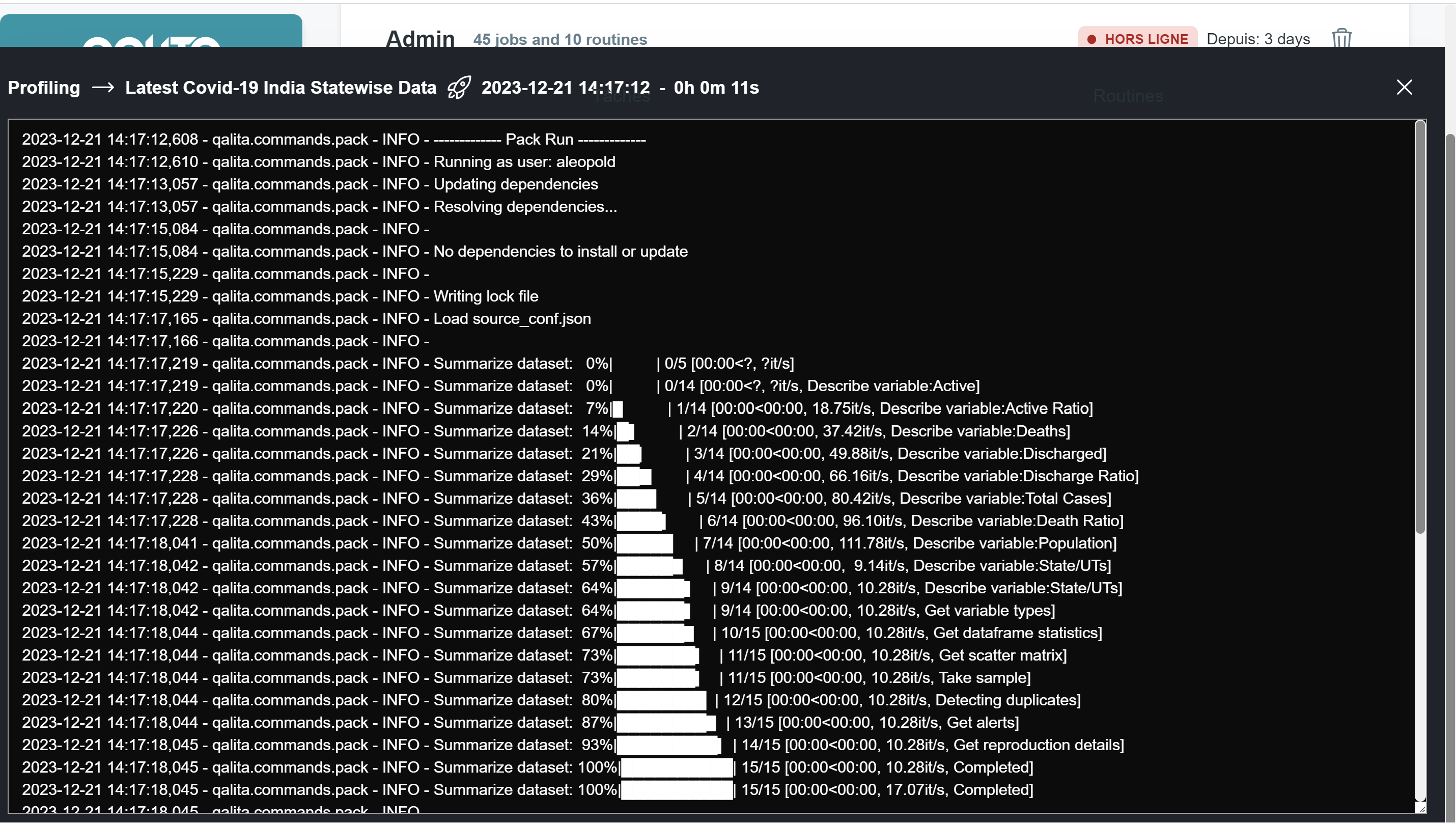

- Task Execution Logs: These are stored in the SeaweedFS storage system. They help audit how packs interact with data and can be found in the "LOGS" folder on the Agent page or in the SeaweedFS bucket named `logs`.

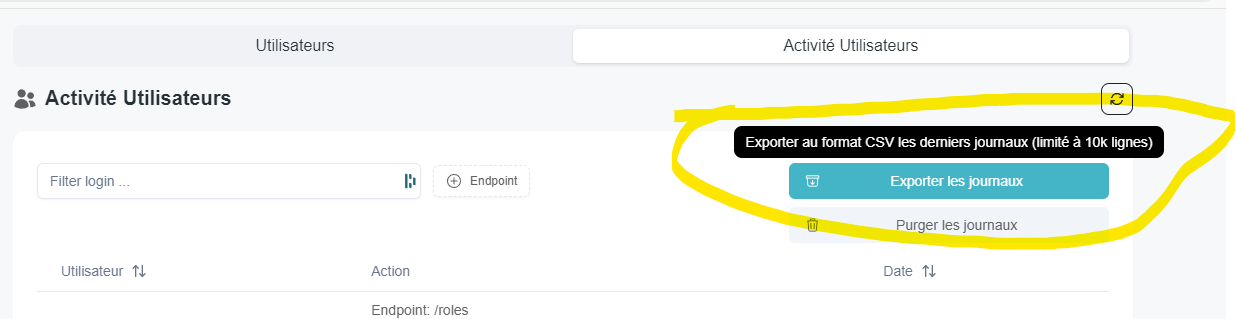

Exporting User Logs

You can export user logs directly from the interface:

You can also export them via SQL from the PostgreSQL database:

SELECT * FROM logs;

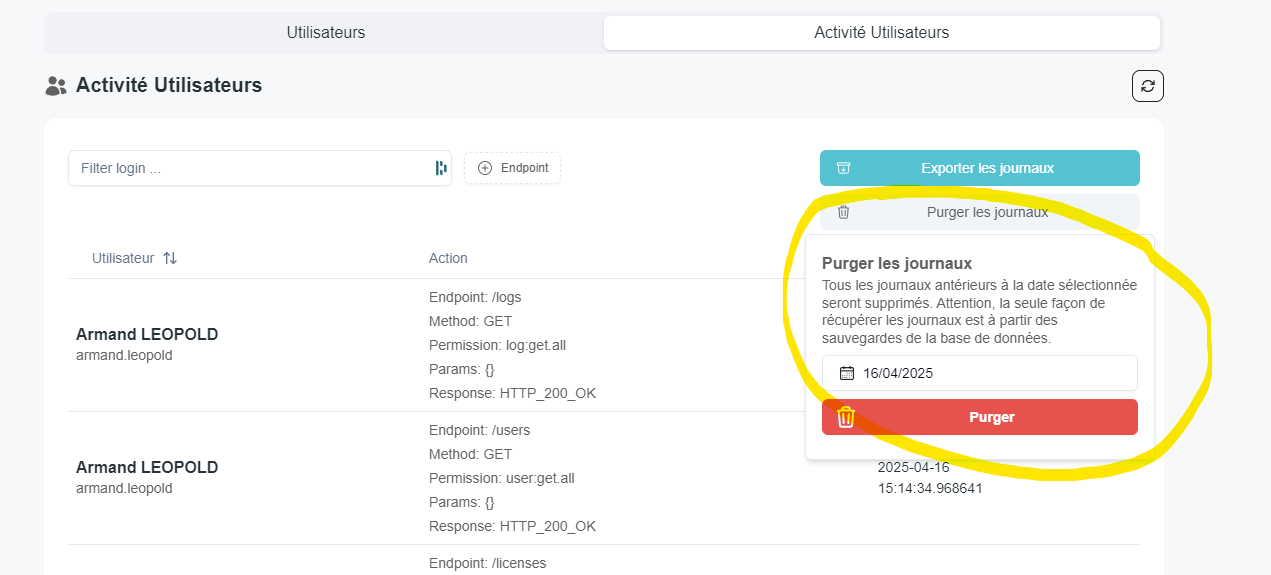
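For a scripted export, the same query can be wrapped in a few lines of Python. This is a sketch under assumptions: `export_logs_csv` is a hypothetical helper that accepts any DB-API 2.0 connection (for example one opened with a PostgreSQL driver), and the demo runs against an in-memory SQLite stand-in with made-up columns, since the actual schema of the `logs` table is not described here.

```python
import csv
import io
import sqlite3


def export_logs_csv(conn, out, batch_size: int = 1000) -> int:
    """Write every row of the logs table to `out` as CSV and return the row count."""
    cur = conn.cursor()
    cur.execute("SELECT * FROM logs")
    writer = csv.writer(out)
    # DB-API cursors expose the column name as the first item of each description entry.
    writer.writerow([col[0] for col in cur.description])
    count = 0
    while True:
        rows = cur.fetchmany(batch_size)  # fetch in batches to bound memory use
        if not rows:
            break
        writer.writerows(rows)
        count += len(rows)
    cur.close()
    return count


# Demo on an in-memory SQLite database; the column names are illustrative only.
demo = sqlite3.connect(":memory:")
demo.execute("CREATE TABLE logs (id INTEGER, user_id INTEGER, action TEXT)")
demo.execute("INSERT INTO logs VALUES (1, 42, 'login')")
buffer = io.StringIO()
exported = export_logs_csv(demo, buffer)
```

In practice `out` would be a file opened with `newline=""`, and the exported file should be protected with the same care as the database itself, since it contains user activity.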

Deleting User Logs

User logs can be deleted directly from the interface:

You can also empty the `logs` table with an SQL query:

TRUNCATE logs;
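Because emptying the table is irreversible, a scripted purge may want to record how many rows were removed and roll back on error. The following is a minimal sketch, not the platform's own procedure: `purge_logs` is a hypothetical helper that takes any DB-API 2.0 connection, and it uses `DELETE FROM logs` rather than `TRUNCATE` so that the example also runs on the SQLite stand-in used for the demo.

```python
import sqlite3


def purge_logs(conn) -> int:
    """Delete every row from the logs table in one transaction; return the number removed."""
    cur = conn.cursor()
    try:
        # Counted first for the audit trail; rows inserted concurrently between
        # the two statements would not be reflected in this number.
        cur.execute("SELECT COUNT(*) FROM logs")
        removed = cur.fetchone()[0]
        # DELETE instead of TRUNCATE: portable across DB-API backends.
        cur.execute("DELETE FROM logs")
        conn.commit()
    except Exception:
        conn.rollback()  # leave the table untouched if anything fails
        raise
    finally:
        cur.close()
    return removed


# Demo on an in-memory SQLite database; the column names are illustrative only.
demo_db = sqlite3.connect(":memory:")
demo_db.execute("CREATE TABLE logs (id INTEGER, action TEXT)")
demo_db.executemany("INSERT INTO logs VALUES (?, ?)", [(1, "login"), (2, "logout")])
demo_db.commit()
removed_rows = purge_logs(demo_db)
remaining_rows = demo_db.execute("SELECT COUNT(*) FROM logs").fetchone()[0]
```

Exporting the logs before purging them keeps an archive available for later audits.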